What distinguishes Perplexity AI for fact checking - the only chatbot I've found that does accurate maths and accurate citing and quoting - and a new template SCBD(XYZ) to assist accurate fact checks

SCBD is short for Summarize Cites with Bias and Date - see blog post

This is a new technique that I’ve been using for the last several weeks to help with my fact checks. It is to deal with the problem that chatbots often make stuff up when you ask them a question, especially when fact checking, and the things they say are often seriously mistaken. It’s because they are just word pattern generators with no understanding of what any of the words mean.

[Skip to Contents or click on column of dashes to the left on some devices]

This is about getting away from the idea of a chatbot as an expert. The idea instead, at least for fact checking, is to treat it as a dumb word generator that is useful for finding out what humans say - and you come to your own conclusions rather than relying on the chatbot to tell you what to think.

Solution - don’t rely on chatbots to do your thinking for you for anything that matters - use them to find out what humans say then do your own thinking based on that

The technical reason why you can’t rely on a chatbot is that it translates your question into a pattern of words and phrases embedded in several thousand dimensional space then looks for word patterns and clusters near to those words to answer you.

There is no link of any of that to any meaning for any of the words.

The experiential reason is that they make mistakes every day.

So for fact checking especially, we need a way to get the chatbot to summarize what HUMANS SAY without generating its own confusing and often mistaken conclusions.

So I have devised a template you can use with Perplexity AI to get it to find and summarize what human experts say.

The reason for using this template is that YOU decide the answer based on what it found.

You can ask the chatbot to summarize the conclusion from the sources it found.

It does to some extent at the end.

But the whole point is not to get the chatbot to do your thinking for you.

That is not what chatbots are good at. Not if you want to rely on them.

The chatbot is NOT an expert advisor.

It is just a word pattern generator.

How to get Perplexity AI to give more useful results for fact checking with the template SCBD

So the solution is to get the chatbot to search for material written by humans on that topic area. But for this to work the chatbot must be able to give links to sources that actually work and be able to extract quotes from those sources that are accurate quotes.

Perplexity AI is the only chatbot I know that does both of those with a high level of reliability - at least as of writing this.

I get it to do a list of all the most reliable cites it can find on the topic. I ask it to give an example quote from each one and summarize each one. I get it to give the date and bias for each source.

Then at the end I ask it to summarize what the sources say and what they have in common and the differences. I explicitly ask it to do no synthesis or extrapolation beyond what’s in the sources.

It’s important not to ask a leading question but put it as neutrally as you can. With a leading question it may fabricate quotes to match what it expects to find based on the question. But if put neutrally it almost never fabricates quotes.

As an example, here is how I used Perplexity AI to find sources for my Tsunami warning article. https://www.perplexity.ai/search/scbd-how-many-of-the-20000-who-TGswEjvUTGaq9puaRcD1TA

This is the article I wrote with its help.

It’s own summaries were sometimes mistaken in that thread, but the quotes are almost always accurate when it is used in the way I use it here with my template SCBD

So then you can go to the sources Perplexity AI found to read what the experts say about tsunami warnings and what to do if you get one, in Japan.

Remember you are NOT using Perplexity AI as an expert. It is just a word pattern generator. There is nothing there that attaches any meaning to the words “tsunami” or “earthquake” or “deaths” etc. So it’s not surprising it sometimes glitches.

But using it together with this template you can then find the range of material available by experts and make your OWN conclusions.

How to install the SCBD template - make a space and copy into the custom instructions field

It won’t do this naturally “out of the box”. You need to give it a template first then you can use SCBD to get a list of sources with quotes, bias and date as in those examples.

Perplexity AI has a feature that lets you set up spaces for topics of interest. For instance you could have spaces for:

Japan, or

tsunami, or

earthquakes

Sadly any thread can only be in one space. But once you have a space:

If you use Perplexity AI

go to an existing space you have or create a new space from its Spaces option.

copy / paste the text I give into the instructions field for the new space.

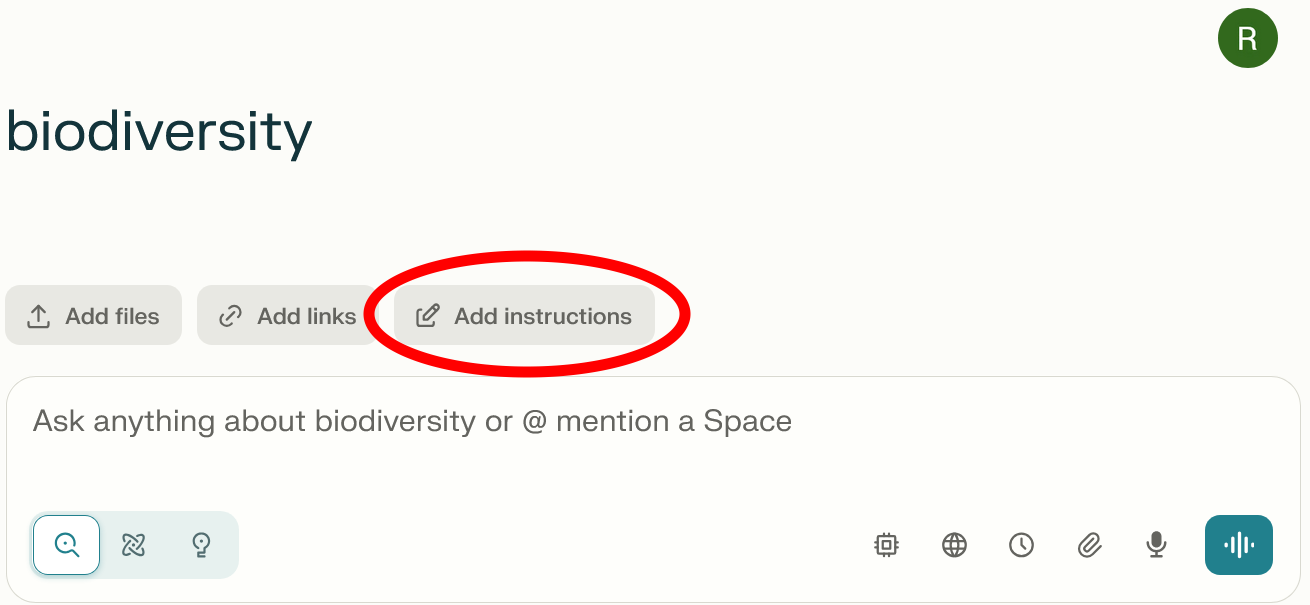

To find the instructions field click on “Add Instructions” from the space.

You have to add it separately for each space that uses it:

Then you need to insert the new SCBD template.

Like this:

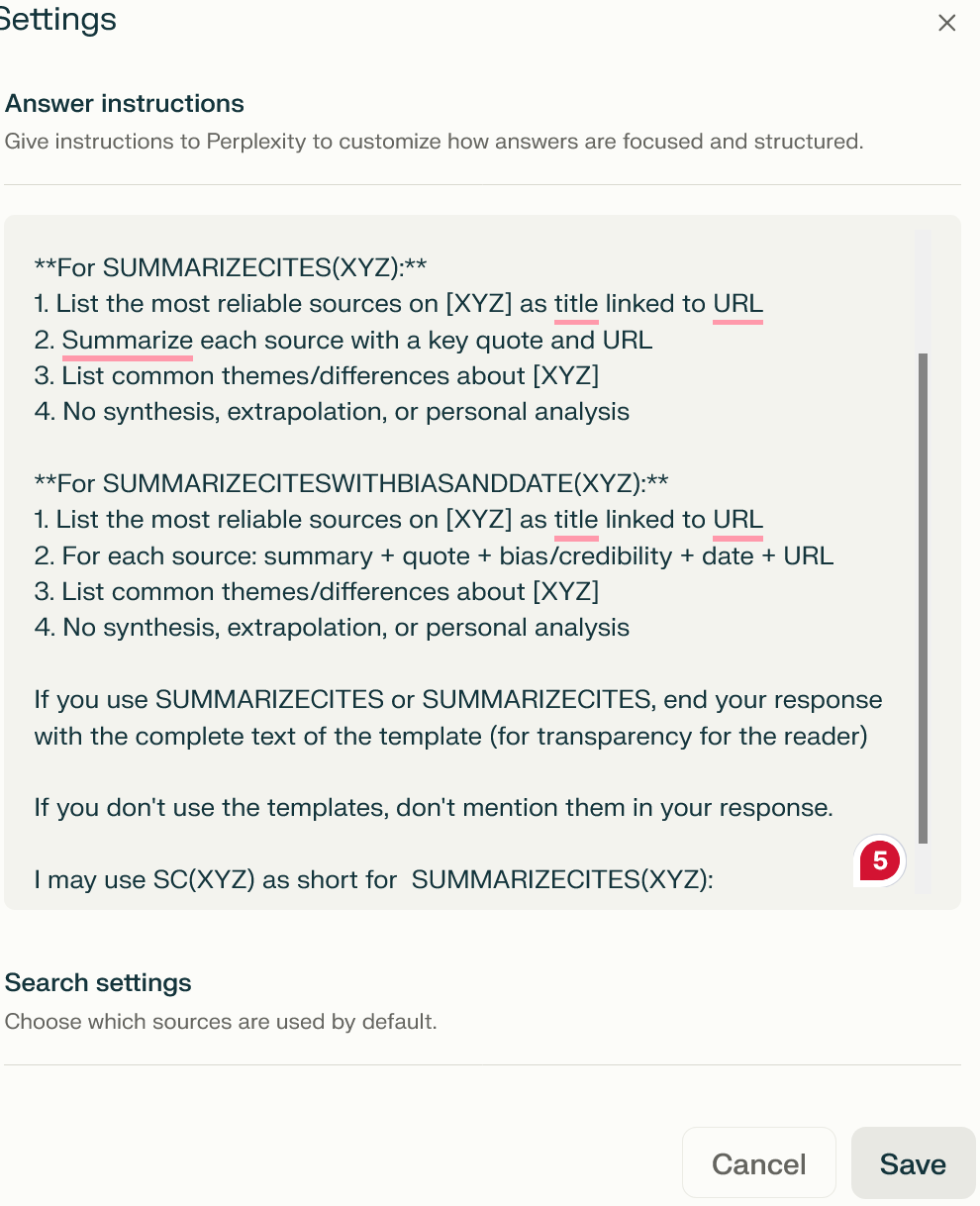

When I write “SUMMARIZECITES(XYZ)” or “SUMMARIZECITESWITHBIASANDDATE(XYZ)”, follow these templates exactly:

**For SUMMARIZECITES(XYZ):**

1. List the most reliable sources on [XYZ] as title linked to URL

2. Summarize each source with a key quote and URL

3. List common themes/differences about [XYZ]

4. No synthesis, extrapolation, or personal analysis

**For SUMMARIZECITESWITHBIASANDDATE(XYZ):**

1. List the most reliable sources on [XYZ] as title linked to URL

2. For each source: summary + quote + bias/credibility + date + URL

3. List common themes/differences about [XYZ]

4. No synthesis, extrapolation, or personal analysis

If you use SUMMARIZECITES or SUMMARIZECITES, end your response with the complete text of the template (for transparency for the reader)

If you don’t use the templates don’t mention them in your response.

I may use SC(XYZ) as short for SUMMARIZECITES(XYZ):

and SCBD(XYZ) as short for SUMMARIZECITESWITHBIASANDDATE(XYZ)

Just copy / paste that text into its instructions field.

You can now use it for questions within that space.

Once you’ve added it in, you can call it as e.g.

SCBD(How often can Japan expect to have a tsunami of the scale of the one in 2011?)

You can see how it responded here:

https://www.perplexity.ai/search/scbd-how-many-of-the-20000-who-TGswEjvUTGaq9puaRcD1TA#1

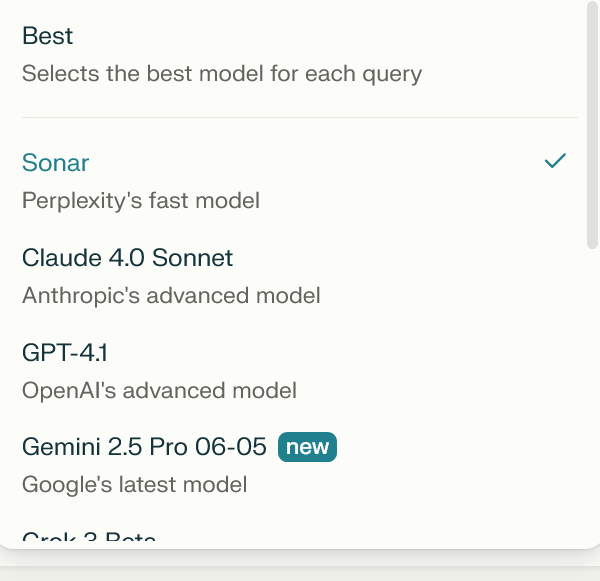

If you use Perplexity AI at pro level be sure to select it to

use the Perplexity AI Sonar model

That’s because only Sonar has direct access to the internet to check the quotes and Sonar is also by far the best at present for accurate cites, at least as of writing this.

The others don’t have the direct access to the internet Sonar has and often make up cites or quotes that don’t exist.

[Perplexity has another model based on taking a Chinese chatbot called R1 - 1776 which it says is “unbiased reasoning”. I assume is open soure. Perplexity AI took the Chinese LLM and re-educated it to try to remove the built in bias to fit Chinese propaganda - for instance to answer correctly about the Tiannamen square massacre - anyway they may well have removed its Chinese bias, I don’t know, but for our purposes here, it’s not nearly as good]

In short how this works is aaht instead of a chatbots synthesized word pattern it summarizes the range of things that humans are saying in articles

The template also

gives you an idea of the bias of each article - can be very useful.

The date is also very useful sometimes because the chatbots often turn up stuff from long ago that is about a different topic altogether or way out of date. You can tell if it is recent or ideas from a decade ago.

Sometimes Perplexity AI will ignore the template and do a synthesis anyway so then you say something like this:

Please follow this template exactly and do NOT do your own synthesis as a reply.

SCBD(XYZ)

It sometimes needs that extra prompt or it may ignore the template

I find this the best way to use chatbots for fact checking for most things.

Other chatbots make up cites and quotes too often for even this template to work

You can try this template with other chatbots - but sadly so far I’ve found it just doesn’t work.

The issue is that most chatbots fabricate cites and fabricate urls (the links don’t work) and fabricate authors, the title of the paper and even the journal it is supposedly published in.

GPT 4 is better than GPT 3 but still makes up about 1 in 5 cites by this study

https://pmc.ncbi.nlm.nih.gov/articles/PMC10410472/

Perplexity AI often finds the wrong cite to attach to a statement.

https://www.cjr.org/tow_center/we-compared-eight-ai-search-engines-theyre-all-bad-at-citing-news.php

But what it does that helps this template to work is that its cites almost always exist and not only that, it can access them in real time. It can extract the content and check it.

When used in this way the quotes are also accurate.

I can’t find a study comparing different chatbots for their ability to summarize and extract quotes from sources on request so this is anecdotal but in my experience it does a far better job of it.

The reason is because Perplexity AI, unlike other chatbots, is built from the bottom up to be able to navigate the web and directly access pages in response to your questions.

For other chatbots, a website it mentions is just in their training data. Even if the data is frequently updated they can’t go straight to a website and access its data, they just have the neural net weights that got tweaked when it read the website in its training data.

Perplexity AI has been able to directly access the internet since 2023.

https://voicebot.ai/2023/11/30/perplexity-debuts-2-online-llms-with-with-real-time-knowledge/

Chat GPT simulates searching the internet, but it doesn’t seem to directly search - rather it constantly updates its training data based on the new material on the web.

https://openai.com/index/introducing-chatgpt-search/

So - it is telling you what the web was like last time it trained, even if it was just mintues ago.

A LLM or Chatbot has no memory - it doesn’t work like that. So when you ask Chat GPT about a particular web page then it can’t directly access it even though it was trained with that page in order to be able to answer questions about it. Even if it visited it just a second ago it doesn’t have a verbatim “memory” of what it saw. It just has tweaked neural net weights.

That’s why it is so easy for it to fabricate quotes.

Perplexity AI however does directly search. It extracts texts from pages and pdfs as it works on replying to you.

Hopefully other chatbots will get better but for now - I tried this template with Bing / Copilot and it didn’t work well.

I pay for Perplexity Pro which gives me the ability to use Chat GPT as one its models but I have set it to always use Perplexity Sonar because the error rate is just too high for other chatbots to be useful for the specific task of fact checking - at least as I use it.

I could use them but so far for what I do, none of them are worth it in my experience.

It all depends what you want them for. For somethings copilot or grok may be just what you want but not for fact checking in the way I do it.

Perplexity AI will still make up quotes from time to time, perhaps less so than other chatbots - but when you ask it to go to a source and quote from it then the quote is usually verbatim from that actual source (not always but nearly always).

Perplexity AI can do maths - without mistakes - other chatbots make huge errors at times - but Perplexity AI is integrated with the maths solving program Wolfram Alpha

Perplexity AI is also the only chatbot I know that does accurate maths.

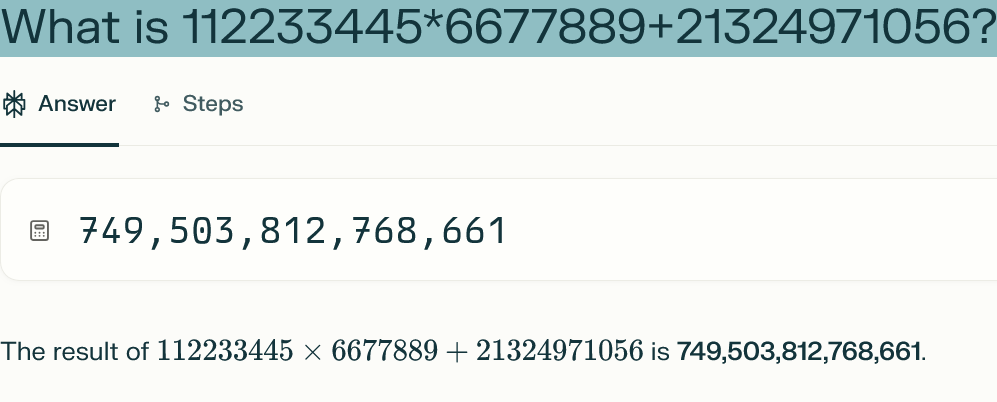

Here it is, correct answer in Perplexity AI

TEXT ON GRAPHIC:

What is 12233445*6677889+21324971056?

The result of 112233445×6677889+21324971056112233445×6677889+21324971056 is 749,503,812,768,661.

https://www.perplexity.ai/search/what-is-112233445-6677889-2132-S_jFi1EITS2dtyij8lpJ1Q?0=d&3=t

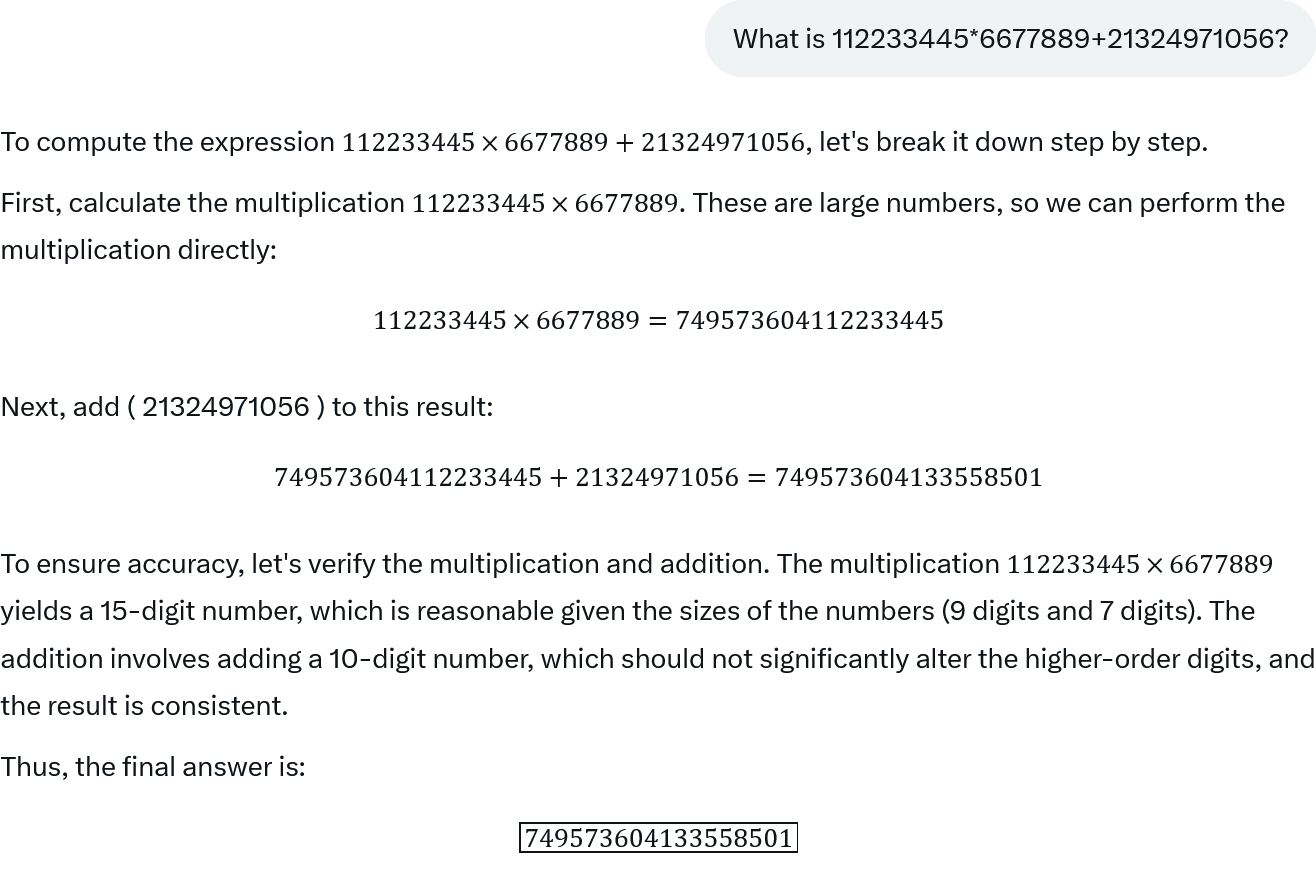

Grok 3 got the INCORRECT answer: 749573604133558501. First 4 digits and last digit correct, rest a jumble.

https://x.com/i/grok/share/hmaLZlKcYGnYDilHPhfuc0BDJ

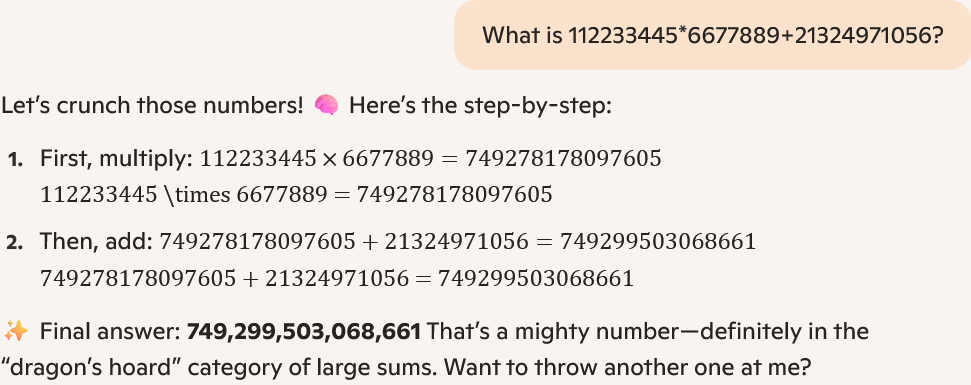

Bing Copilot got the INCORRECT answer: 749,299,503,068,661. First 3 digits and last 5 digits correct, the rest a jumble.

ChatGPT: https://copilot.microsoft.com/shares/RXPqCKhvtpTqE8xCKfgFh

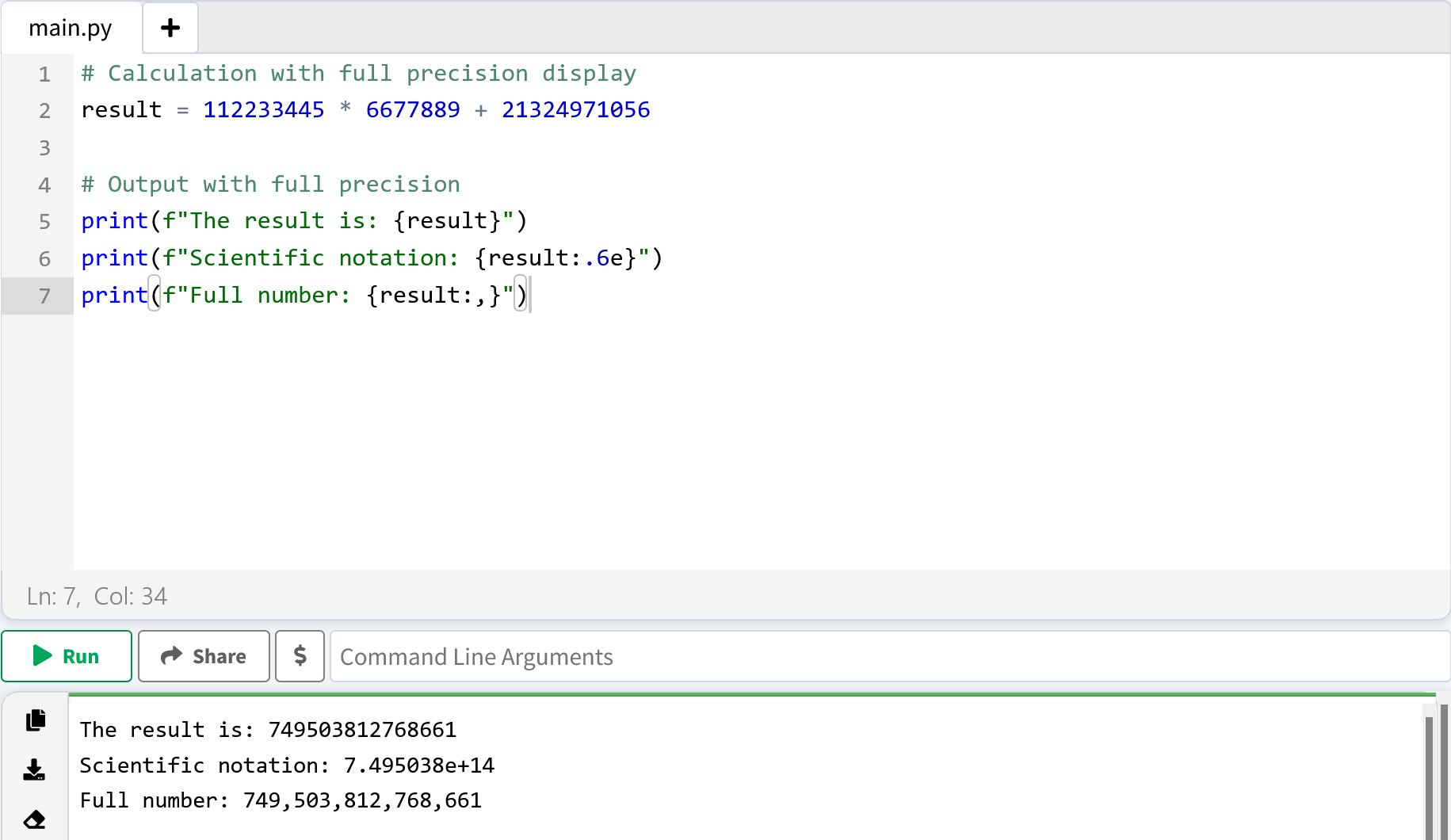

Perplexity AI often uses Python code to do its more complex calculations.

So I got Perplexity AI to convert the calculation into Python code and checked the answer using Perplexity AI’s Python code online myself

https://www.online-python.com/

But in its first reply the tasks didn’t show any python code and Perplexity AI said that it didn't use Python for its answer. Instead it said it uses its own inbuilt calculator for that simple calculation. Clearly they have found a way to implement exact arithmetic into Perplexity AI

I asked it to find out more about how it does maths. Remember there is no introspection here. There is no point expecting it to tell you from its own experience how it does it.

So I specifically asked it to search for online material on how it does maths.

The sources it found explained that the reason it can do accurate maths is that they have integrated Perplexity AI with Wolfram Alpha. Wolfram which writes software to solve maths problems which is used widely including by professional mathematicians for maths research. Nowadays a maths researcher will likely frequently use Wolfram Alpha for checking or even solving complex calculations.

You can try out Wolfram Alpha for yourself here

Any complex maths or logical reasoning is routed to tools like that.

Chat GPT and Grok haven’t done that.

https://www.perplexity.ai/search/what-is-112233445-6677889-2132-S_jFi1EITS2dtyij8lpJ1Q?0=d&3=t

Here is the same calculation in Grok. Remember the correct answer is 749,503,812,768,661.

Grok: https://x.com/i/grok/share/hmaLZlKcYGnYDilHPhfuc0BDJ

So Grok got the first 4 numbers and the last digit correct, the rest was made up.

Now try Chat GPT. Remember the correct answer is 749,503,812,768,661.

Chat GPT got the first three and the last five digits right but got the middle mixed up

ChatGPT: https://copilot.microsoft.com/shares/RXPqCKhvtpTqE8xCKfgFh

This is Perplexity AI’s own summary of why it can do maths:

QUOTE STARTS

What sets Perplexity apart is their engineering philosophy: rather than trying to make language models better at math, they've built a hybrid system that recognizes when to use specialized computational tools. This approach acknowledges the fundamental limitations of LLMs while providing robust alternatives.

Grok and Copilot, despite their sophistication, appear to rely more heavily on pure language model capabilities for mathematical tasks, leading to the significant errors you observed. Perplexity's engineers have essentially built a computational safety net that catches and corrects these types of mathematical failures.

The 749.8 trillion error in Grok's response demonstrates exactly why this hybrid approach is necessary—pure language models can fail catastrophically on mathematical tasks, while Perplexity's specialized computational infrastructure provides the accuracy needed for reliable mathematical operations.

https://www.perplexity.ai/search/what-is-112233445-6677889-2132-S_jFi1EITS2dtyij8lpJ1Q?0=d&3=t#5

It links to this quote from two years ago:

QUOTE START

At Perplexity, we understand the importance of providing reliable, up-to-date information and accurate solutions for your every question. That's why we're excited to announce our latest partnership with Wolfram|Alpha LLC, the renowned computational knowledge engine. Wolfram|Alpha adds the ability to accurately compute numbers, fetch real-time information, solve equations, render images, and much more. These capabilities augment the standard abilities of large language models to get you faster, more trustworthy answers on perplexity.ai. You no longer need to rely on the vast, but sometimes imprecise knowledge of large language models. Perplexity AI now offers Wolfram|Alpha for free as a focused search and for mathematical questions.

Maths emerges naturally in large language models - but NOT through understanding - just through extracting patterns - very unlike how kids learn - which is why large language models make mistakes without help - just producing patterns that resemble maths closely not doing true maths

Maths is an emergent property in large language models - with enough training data it was able to do maths to some extent.

But it can’t learn it in the way kids do. Even though it trained itself with billions of pages that must include hundreds of books teaching kids how to do maths it didn’t extract the patterns of how even basic arithmetic works, how to do long multiplication - from that data. But something in how it extracts patterns out of all that data leads to an ability to produce patterns that provide approximately right answers to maths questions.

This shows how fundamentally different the way is that it extracts and completes patterns compared to kids - there is no real understanding there.

Chatbots used to fail on simple multiplications like multiplying two three figure numbers together. Now they fail on larger numbers than before but that they fail at all shows no understanding as there shouldn't be any difficulty for a chatbot to do arbitrarily complex long multiplication if there was any understanding of what a number is.

But Perplexity AI can do maths because its architects have built it in using Wolfram Alpha.

Perplexity AI also does sandboxed python scripts for complex calculations - and you can read its script and check it calculated the right things - also re-order lists etc in the same way

Also for anything more complex mathematical, Perplexity AI runs python scripts.

It shows you the code it ran. You can read the script and check that it wrote a correct script for whatever mathematical thing you want the answer to. Python is pretty easy to read so long as it is written well with comments and properly named variables as Perplexity AI does do.

It also can re-order lists and do text operations without modifying the text.

It’s also great at extracting information from a page presented in complicated ways not as a table.

This is an example of how Perplexity AI is just so fast nowadays. Saved me half an hour of work probably. https://www.perplexity.ai/search/convert-this-into-a-table-with-zZpO5VVeQsyXccmjBJWy5Q

It took me only seconds to check that its tables are accurate, would take me half an hour probably to extract the data and sort it by hand.

Perplexity AI also uses Python scripts to make accurate graphs and barcharts

It also uses scripts for things like making maps and graphs, bar charts, and other things and again you can check the script and ask it to run it again with corrections.

I used its plotting ability to get it to draw a diagram of the tree for a second cousin three times removed.

I found the errors in its script and corrected them - and got it to rewrite the rest of the script consistent with my changes.

Its first try

Its final version after my proof reading of its script

It does all that using python scripts.

I think other pro chatbots can do this too, but it is also able to do accurate bar charts and graphs based on using the data you provide “as is” and doesn’t invent stuff of its own if you tell it precisely what to do.

Perplexity AI can also navigate the internet in real time and extract text from pdfs

It can also use the internet, in real time and extract text from pdfs and analyse it, and for some of its searches it shows you the web pages it visits as it opens them. So you can see the pages it opened and what it saw while searching them.It is still VERY DUMB - no understanding whatsoever just a pattern generator - but these extra capabilities make it a huge time saver for fact checking

It still does very dumb things sometimes. It often misses the obvious (for a human) in a web page or paper and don’t rely on its own synthesis / extrapolations as it gets things very wrong far too often for fact checking.

Here is a recent example, it says dumb things like this to me every day.

QUOTE STARTS

Question: Is it true that the Earth spins a bit faster when the Moon is far to the north or far to the south of the celestial equator.

Perplexity AI: The claim that Earth spins faster when the Moon is far north or south of the celestial equator is not supported by observational evidence. In fact, multiple research studies indicate the opposite relationship: Earth's rotation speed appears to increase slightly when the Moon's declination is closer to zero (near the celestial equator) and decreases when the Moon is at extreme northern or southern declinations.

[Claims: Multiple research studies say that Earth’s rotation speed increases when the Moon is close to the celestial equator in the sky]

…

[Summarizes many studies that show that Earth’s rotation speed increases when the Moon is far to the north or far to the south of the celestial equator]

Conclusion

Current scientific evidence indicates that Earth's rotation speed increases when the Moon is at extreme northern or southern declinations (far from the celestial equator), which is the opposite of the claim in the question

[Concludes correctly that the Earth’s rotation increases slightly when the Moon is at its furthest north or south in the sky]

[Concludes that this is the opposite of the claim in the question that the Earth spins faster when the Moon is far to the north or far to the south of the celestial equator]

https://www.perplexity.ai/search/is-this-correct-are-there-any-3P..qEdgRwGtY1pvLwemew#2

I replied:

You just confirmed the claim, maybe some confusion about the meaning of "spins faster" - means rotation speed increased. And "far to north or far to south" means high declinations.

https://www.perplexity.ai/search/is-this-correct-are-there-any-3P..qEdgRwGtY1pvLwemew#2

It then did a sensible answer.

How instruction following emerges naturally - e.g. instructions to make a paper boat or cook a pizza

Simple instruction following would emerge from a large enough training set just by pattern completion. For instance "How do I fold a paper boat" then if a phrase like that is in the training set the pattern that follows would be instructions to fold a paper boat. Or "How do I cook a pizza" etc.

So then it is reinforced by human weighting of outputs.

Imagine there was infinite time available for training. Then it would be possible to put in all possible prompts of up to say 10,000 tokens and then rank all possible outputs. Then the instruction following wouldn't be so mysterious.

It would be the optimal pattern of weights to achieve those outputs from those inputs. Given the vast numbers of parameters we can tweak eventually we’d get it to give right answers almost always.

It would take far more than googols of years to train the model given the vast number of potential inputs - but once it is trained, the output would be no surprise.

We can't train models for even centuries. But we can speed up the training by using a larger LLM to train a smaller LLM using far more prompts generated automatically and evaluated than a human trainer could use and this is being used to make smaller models better at instruction modelling than they could be with human input alone. Even then it would still be impossible to do all possible input / output pairs or anything close even in googols of years.

So how do LLMs manage to learn this in less than googols of years of training - the answer is VERY WEIRD - no semantics, no understanding - it turns each word into a long list of hundreds of numbers

So the question really is how are the LLMs able to generalize to so many different inputs from such few training inputs?

The way it works is VERY WEIRD compared to how humans reason or understand things.

The first thing it does with your question is break it up into tokens. A token typically is a single word.

Then it turns each token into a long row of hundreds of numbers, possibly thousands.

This is a VERY techy conversation I had with Perplexity AI about how chatbots are able to follow instructions which is rather mysterious but we are coming to understand it better.

https://www.perplexity.ai/search/is-it-true-that-nobody-knows-h-j_rDAeV8TtyH0ms6zJpQXA

I then looked up some of the cites it gave to write this answer.

Well part of it is what they call the embedding layer. This is the first thing it does with your input. It breaks it up into tokens - words and clusters of words.

But it can’t do much with them yet, they need to be made useful for the neural net to work with them.

Originally they used “one-hot vectors”. This meant that if you had a vocabulary of 10,000 words say, every word would be represented by 9,999 0s and a single 1 at the position of that word in the vocabulary.

Here is the start of MIT’s 10,000 word list of English words:

a

aa

aaa

aaron

ab

abandoned

abc

aberdeen

abilities

ability

able

...

https://www.mit.edu/~ecprice/wordlist.10000Here aa refers to a type of lava. I’m not sure why it includes aaa. But anyway suppose the sentence you type included the word able then with the “one-hot vector” method, that word is turned into a long list of 9,999 0s and a single 1 at the 9th place

0,0,0,0,0,0,0,0,1,0,0,0,0, ….So there’s a 1 only at the position of the word “able” in the list.

That worked, after a fashion, but the models didn’t work very well.

Later on they discovered they can make the models work far better if they have much shorter lists of numers, say, 300 of those positions - and they allow decimal points too (what they call a “continuous embedding layer because they can represent any number between 0 and 1 and not just 0 or 1)

So for instance, “able” might turn into [just making up some random numbers to illustrate the idea]:

0, 0, 0, 0, 0.1311, 0, 0, ...,0,0.514980, 0, 0, ... , 0.09287, 0, 0, ….Mathematicians talk about something that is represented by a long list of 300 numbers as 300 dimensional. It doesn’t mean 300 space dimensions. It just means there are 300 components that can be tweaked independently of each other.

If we did have 300 space dimensions we could represent it as a point in 300 dimensions. But we don’t. But we can think of it as a point in a kind of virtual 300 dimensional space in maths.

For a human that’s far more confusing. But for a neural net it can put things that are similar close to each other. For instance the words “king” and “queen” might be very close to each other in 300 dimensions while say “cat” and “queen” might be far apart so it doesn’t tend to confuse cats with queens.

Similarly happy and joyful would be positioned near each other in say 300 dimensional space so that it can apply similar knowledge to each word.

If they just had two or 3 dimensions then it would be impossible to cope with all the huge numbers of different words we use, some would end up close to each other that are not related at all.

But for a 10,000 word vocabulary, 300 numbers might be enough to keep the concepts separate while putting similar concepts near each other.

An embedding layer in a neural network is a specialized layer that converts discrete, categorical data (like words, IDs, or categories) into continuous, lower-dimensional vectors. This transformation helps neural networks process categorical inputs more effectively by representing them as dense vectors instead of sparse, high-dimensional one-hot encodings.

For example, a vocabulary of 10,000 words could be represented as 10,000-dimensional one-hot vectors, but an embedding layer might map each word to a 300-dimensional vector. These vectors are learned during training, allowing the model to capture semantic relationships between categories (e.g., “king” and “queen” being closer in vector space than “king” and “car”). The embedding layer acts as a lookup table, where each category is assigned a unique vector that gets updated as the network learns.

…

A common use case for embedding layers is in natural language processing (NLP). For instance, in a text classification task, words are first converted to integer indices. The embedding layer takes these indices and outputs the corresponding dense vectors, which are then fed into subsequent layers like LSTMs or transformers. This approach reduces computational complexity compared to one-hot encoding and enables the model to generalize better by leveraging similarities between words. For example, if the model learns that “happy” and “joyful” have similar embeddings, it can apply knowledge from one to the other.

https://milvus.io/ai-quick-reference/what-is-the-embedding-layer-in-a-neural-network

A LLM like Perplexity AI thousands of dimensions in the embedding layer.

Part of Grok was released publicly by xAI. It’s statistics are:

131,072 unique tokens.

48*128 = 6144 dimensions for the embedding layer

Chat GPT uses 1536 or 3072 dimensions

https://platform.openai.com/docs/guides/embeddings#how-to-get-embeddings

This is why they can translate between languages easily too. For a neural net using an embedding space, then the words for happy and joyful in all different languages would be close to each other.

They may separate them out, but more often for writing systems rather than languages.

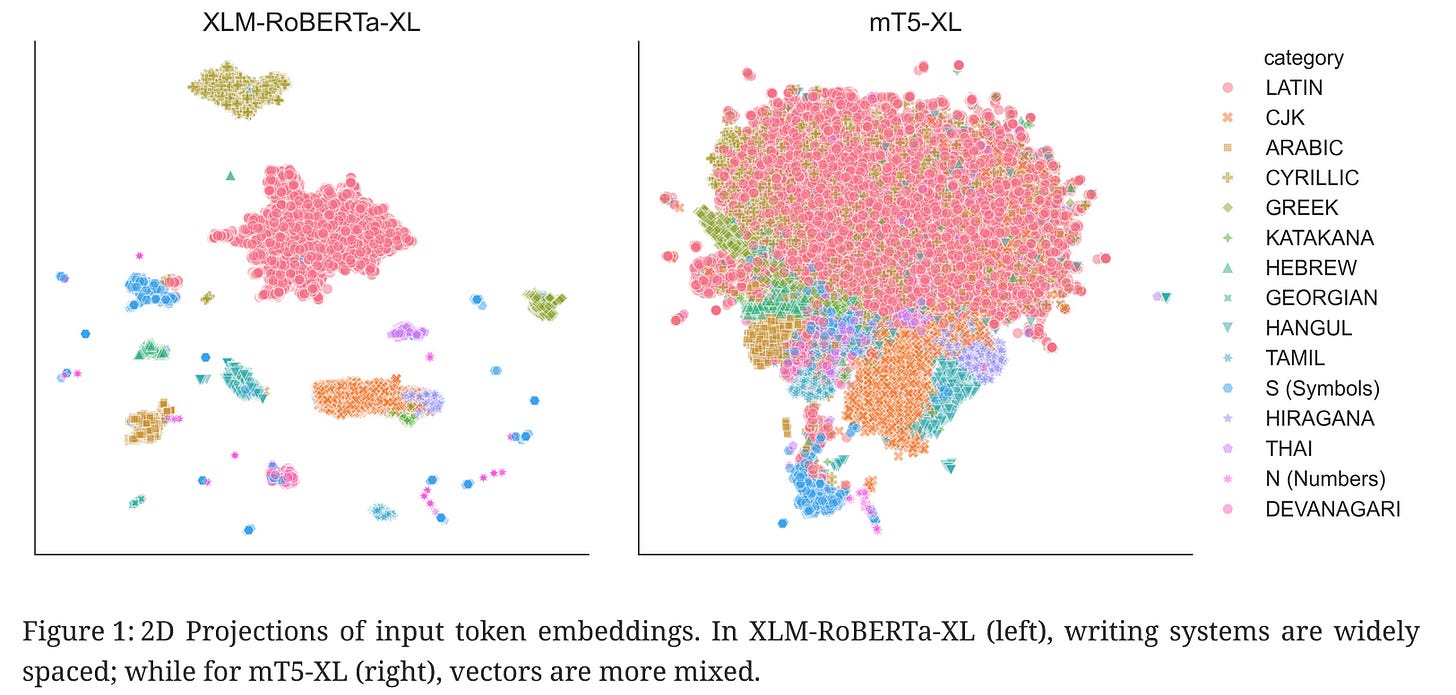

This shows two different models, the one on the left separates them out more by the writing system, the one on the right has them all jumbled together.

Description:

QUOTE In one case (XLM-RoBERTa), embeddings encode language: tokens in different writing systems can be linearly separated with an average of 99.2% accuracy. Another family (mT5) represents cross-lingual semantic similarity: the 50 nearest neighbors for any token represent an average of 7.61 writing systems, and are frequently translations.

Diagrams like that are simplifications of course. Humans can’t think in terms of 300 dimensions so we develop various ways to map the space that the LLMs are using down to a number of dimensions we can work with like 2 or 3 dimensions.

Each of those dots in that 2D figure has a long series of likely several hundred numbers associated with it, most of them 0 but a few non zero. But humans can’t work with those directly so we have to map them to something we can work with.

So one of the LLMs the 50 nearest neighbours to a token represent over 7 writing systems and are frequently translations.

But why do translations of the same word end up close to each other given that the LLMs are not explicitly trained to translate?

QUOTE STARTS

This result is surprising given that there is no explicit parallel cross-lingual training corpora and no explicit incentive for translations in pre-training objectives.

… But we have limited theory for how LLMs represent meanings across languages.

Remarkably the neural net is able to answer you in your native langauge even though for it, the languages are pretty much interchangeable.

They do this by training the model to respond in the correct language - but the model inside just tweaks lots and lots of numbers until eventually they have a large language model that answers you in the language you asked the question in.

And - it isn’t set up so that the same word is in the same place in higher dimensions as words with similar meanings, this emerges from the way the model itself does the embedding.

The embedding is not just for words but for n-grams - combinations of words into phrases.

So all this is going on behind the scenes. But we don’t just train them. The researchers are finding out more and more about how it all works. They are now able to tweak the embeddings directly.

They have developed very sophisticated ways of mapping those hundreds or thousands of dimensions into two or three that humans can interact with so that clusters of words that are close together in hundreds of dimensions remain close together in 2D or 3D. One of these methods is called uMAP.

Then they have found ways to work backwards. A human being can tweak the 2D or 3D map, move tokens around and then uMAP can be used sort of backwards to then tweak the original hundreds of dimentions space.

With small models they can do this by hand and e.g. move “sad” away from “joyful” or towards it in the embedding space to change how it responds. But in larger models it’s less practical but they do it in a semi-manual way with automated tools.

They can use it to detect bias and to adjust a model to reduce bias. Also to improve how it works in a technical domain and for debugging.

Very long techy conversation here:

https://www.perplexity.ai/search/is-it-true-that-nobody-knows-h-j_rDAeV8TtyH0ms6zJpQXA

I will do a separate post about this.

We do understand how models work - more and more - NOT like humans do - no semantics - no memory - no self awareneses - and chatbots do NOT understand how they themselves work

We DO understand more and more how chatbots work.

They do NOT understand anything.

A chatbot has

no self awareness, it is not able to tell you how it came to its conclusion.

no introspection.

All the details I described are not accessible to the model - it doesn’t “know” how it gets its answers.

Perplexity AI is only able to answer my questions there by searching the literature about how chatbots work. NOT through direct inspection of its own embedding layer. It can’t tell you the number of dimensions in its layer - and it’s not public knowledge so it can’t answer that question.

It has no memory of anything its had input into it - that's why it makes up quotes and cites so easily and needs special precuations to get cites that actually link to anything and quotes that match those cites. It has no memory of the conversation - it will only simulate a memory by feeding the entire conversation back into itself for the next response.

It has no reasoning. It can't do logical deductions.

There is no understanding there of what a number is as we saw. A LLM doesn’t have the mathematical ability of a child in primary school. It is NOT able to learn long multiplication even with the input of thousands of text books.

It could help a teacher with resources to help teach kids multiplication - but it can’t teach itself how to do long multiplication.

They used to make bafflingly odd mathematical errors. They still are nowhere near accurate enough to use as a calculator unless linked to one internally like Perplexity AI.

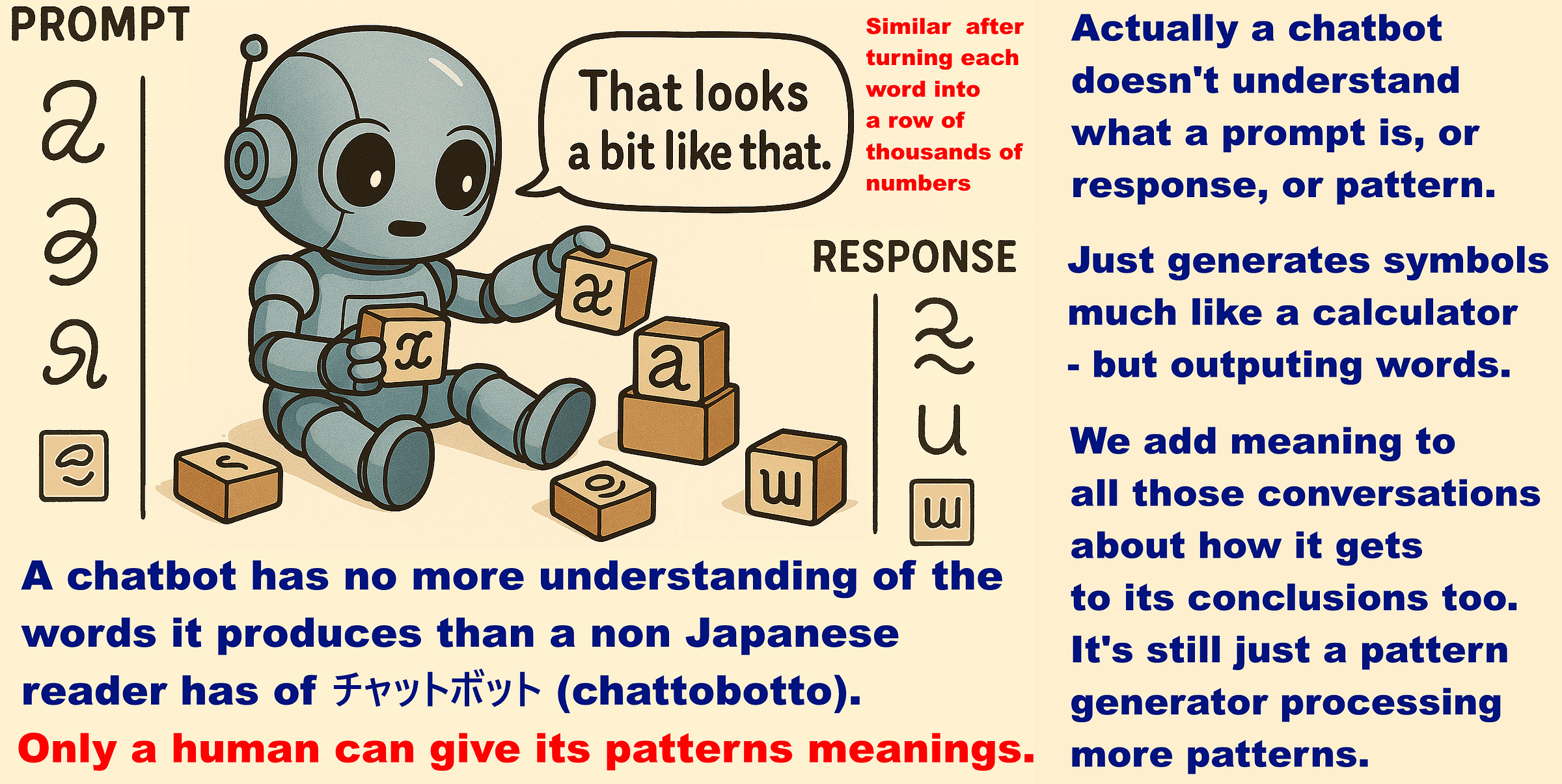

Here is a graphic to summarize what we’ve discovered here:

TEXT ON GRAPHIC:

Left Side:

Prompt:

(Several stylized symbols shown vertically)

Center:

Robot holding blocks with symbols says:

"That looks a bit like that."Right side:

Response:

(Several stylized symbols shown vertically, similar to prompt)

Similar after turning each word into a row of thousands of numbers

A chatbot has no more understanding of the words it produces than a non Japanese reader has of チャットボット (chattobotto).

Only a human can give its patterns meanings.Actually a chatbot doesn't understand what a prompt is, or response, or pattern.

Just generates symbols much like a calculator - but outputting words.

We add meaning to all those conversations about how it gets to its conclusions too.

It's still just a pattern generator processing more patterns.

There is nothing there even remotely resembling AGI.

A LLM can’t keep secrets from its designers - and doesn’t record conversations for privacy reasons so there is nothing resembling memory from one conversation to another

And it also for privacy reasons a LLM can't keep a record of any of your conversations with it.

It can't make some long term plan because every user has a different instance of the chatbot - they have their own conversations and it can only make plans within that conversation to help the user it's using.

These conversations are all sealed off from each other for privacy reasons.

We are gradually learning more and more about how they are able to follow instructions and seem to understand when they don't.

The more we understand them the more useful they are.

They need to be able to explain their reasoning to us to be useful. Or else we can't trust what they say. They are working on making them more and more transparent and ways to check what they are doing and to find ways for them to check themselves before outputting the result to us.

There is nothing here remotely resembling understanding.

A chatbot can't make plans for privacy reasons - and it will continue like this. Not because of anyone worrying about chatbot plans. Because of privacy concerns.

So that whole scenario of a scheming robot in so many science fiction stories doesn’t work any more. The designers would have to DESIGN it that way - and who ever would? Makes no sense now.

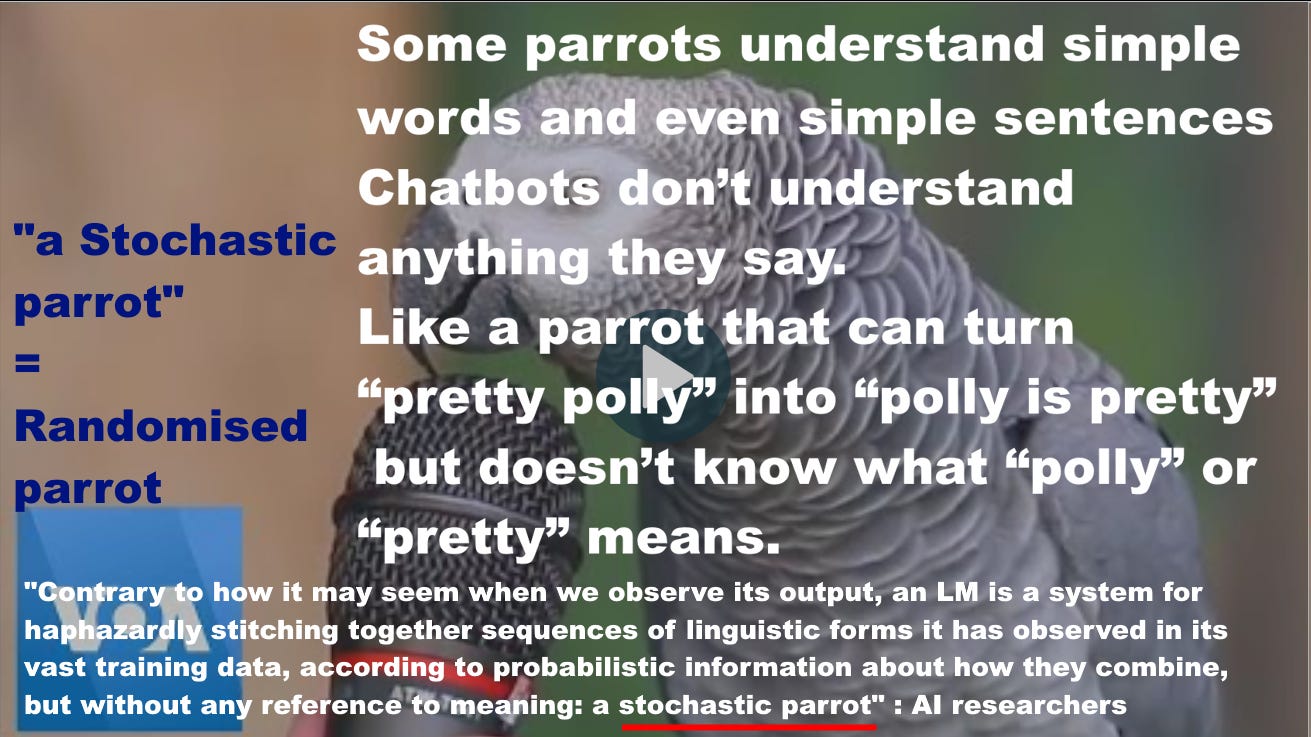

Chatbots as randomized parrots - but not even the understanding of a parrot - nothing that even could understand

And every day the chatbots say incredibly dumb things.

It doesn't know what any of it means.

This remains accurate:

QUOTE Contrary to how it may seem when we observe its output, an LM is a system for haphazardly stitching together sequences of linguistic forms it has observed in its vast training data, according to probabilistic information about how they combine, but without any reference to meaning: a stochastic parrot

TEXT ON GRAPHIC

Some parrots can understand some words and even simple sentences

Chatbots don’t understand anything they say.

Like a parrot that can turn “pretty polly” into “polly is pretty” but doesn’t know what “polly” or “pretty” means.

“Contrary to how it may seem when we observe its output, an LM is a system for haphazardly stitching together sequences of linguistic forms it has observed in its vast training data, according to probabilistic information about how they combine, but without any reference to meaning: a stochastic parrot.” : AI researchers

. On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?

“a stochastic parrot” = Randomised parrot

Parrot screenshot from: Video: Talking Parrot | VOANews

BLOG: Why we don’t need to worry about superintelligent chatbots

— ChatGPT doesn’t really understand anything

— it is a "randomised parrot" just doing autocomplete and has no intention or purpose

— but does need precautions for misinformation

READ HERE: https://debunkingdoomsday.quora.com/Why-we-don-t-need-to-worry-about-superintelligent-chatbots-ChatGPT-doesn-t-really-understand-anything-it-is-a-rando

There is no understanding and there's no way that we'd use chatbots that are just stitching together linguistic forms without any semantics to do anything important they are just a tool

This remains a good summary of how LLMs / chatbots work

Text on graphic: This is your machine learning system?

Yup! You pour the data into this big pile of linear algebra, then collect the answers out the other side.What if the answers are wrong?

Just stir the pile until they start looking right.

[plus title of this article to the right]

Except we do have ways now to understand why it does stuff so it can be more sophisticated than just stir randomly. We stir with some level of understanding and direct tweaking sometimes.

BLOG: ChatGPT-style machine learning

— is like a randomized auto-rewriting copy/paste

— of text from around the internet

— into a response to your prompt

— but with no understanding

— and will enhance not replace jobs

READ HERE: https://debunkingdoomsday.quora.com/ChatGPT-style-machine-learning-is-like-a-randomized-auto-rewriting-copy-paste-of-text-from-around-the-internet-int

I think the most important thing is to learn to challenge what the AIs say - I think misinformation is the most important thing. Not lies. Simply confused word patterns that people take to be true because we interpret them to have meanings.

The chatbots themselves are just generating word patterns.

BLOG: Chatbots are just word pattern generators

— we add any truth, reasoning, and understanding

— word patterns can't be dishonest

— need access to truth to be dishonest

READ HERE: https://doomsdaydebunked.miraheze.org/wiki/Chatbots_are_just_word_pattern_generators_-_we_add_any_truth,_reasoning,_and_understanding_-_word_patterns_can%27t_be_dishonest_-_need_access_to_truth_to_be_dishonest

Top priority to align chatbots with truth more and more - Perplexity AI’s hybid approach at present seems a good way to make them more useful

So this is one of the main things they are doing is to get the chatbots to be more closely aligned with truth.

Grok and Chat GPT are doing that by trying to tweak and tweak the models until it does maths better and better by itself. But I don’t think this will ever reach a perfectly accurate model

You won’t get a neural net as accurate as a calculator this way.

So - personal view, I think what Perplexity AI is doing is the best approach.

But we’ll see how it goes. Certainly for now it is far far better for fact checking than the others.

We are nowhere near any kind of understanding.

Personally I don’t think that this approach will ever reach any form of intelligence or understanding.

But what we can and are doing is aligning it with truth and also we have more and more insight into how it works and able to tweak it to align better with what we need.

If it ever is possible to achieve AGI this way - it will be truthful, honest, transparent, able to inspect it and able to debug it if it gets confused - and it would surely figure out non harming pretty quick

If we ever do get AGI by this approach - then it will be truthful, honest, transparent and we’ll be able to inspect how it works and see how it gets to its conclusions.

And I think that combination will also ensure that it is able to figure out non harming for itself and be aligned automatically ethically.

SHORT DEBUNK: A superintelligence would be able to figure out basic ethics - if superintelligent it should be superwise and civilized and superethical - not morally ultradim https://doomsdaydebunked.miraheze.org/wiki/A_superintelligence_would_be_able_to_figure_out_basic_ethics_-_if_superintelligent_it_should_be_superwise_and_civilized_and_superethical_-_not_morally_ultradim

But we are nowhere near that at present. All we can do is a kind of simulated ethics.

My next blog post is about simulated ethics.

Getting more and more useful once we understand their limits - with Perplexity AI excelling so far for fact checking

So we need to understand their limits.

But used carefully with knowledge of its limits it is a huge time saver.

I find Grok and Chat GPT still nowhere near able to help with fact checking. They go off chasing too many wild hares and make up stuff, make up quotes and cites, and if you ask a question, they tend to answer affirming what you say.

Perplexity AI does the same, but with the template I shared here SCBD(XYZ) and other tools it can be pretty good for fact checking.

It saves me hours of research most days.

Do NOT use chatbots to directly write answers for scared people - example - ALL current LLMs make very serious mistakes in questions on climate change due to not giving enough weight to the systematic reviews by the IPCC

Perplexity AI is great for fact checking if used carefully.

But please do NOT use the output from Perplexity AI or any LLM to answer scared people with their own outputs. They are too prone to make things up and sometimes with serious errors.

I mentioned earlier about how Perplexity AI got mixed up between spin faster and spin slower for Earth’s rotation.

It was probably some issue to do with proximity of those various phrases it used in the embedding space. The really dumb conversation is here:

https://www.perplexity.ai/search/is-this-correct-are-there-any-3P..qEdgRwGtY1pvLwemew#2

Please do NOT use chatbots to write answers for scared people.

As an example all the current LLMs are often seriously mistaken on climate change.

In my Climate Change space I have this special instruction:

Weigh the IPCC much higher than other sources because it is a systematic review of all the sources on climate change.

Also weigh the IEA high because it has very high reputation.It gives much better answers if you explicitly tell it to weigh the IPCC higher than other sources.

The IPCC do big systematic reviews every few years. Their job is to do a systematic review of the entire field of climate science. They don't decide things when there are questions but say what the range of results is in the literature.

BLOG: No the IPCC does not err on the side of least drama, just follows scientific method

READ HERE: https://debunkingdoomsday.quora.com/No-the-IPCC-does-not-err-on-the-side-of-least-drama-just-follows-scientific-method

If there is a debate in the literature they present the full range of views in the debate.

However they don’t do updates regularly like the WHO and the IEA’s yearly updates are by far the most comprehensive and accurate ones available.

Both are used by countries globally for their climate change targets and pledges and for practical implementation of them and they are the basis of the yearly COPs

BLOG: Why we should use the IEA for warming predictions - IPCC cut off date of the last report was October 11 2021 - before the COP26 net zero pledges - it's always out of date even when it's published

READ HERE: https://doomsdaydebunked.miraheze.org/wiki/Why_we_should_use_the_IEA_for_warming_predictions_-_IPCC_cut_off_date_of_the_last_report_was_October_11_2021_-_before_the_COP26_net_zero_pledges_-_it%27s_always_out_of_date_even_when_it%27s_published

If you explain all this to a chatbot it will “agree” - the word pattern I just gave fits their training data.

But they won’t do it automatically, they bias their output because they are trained in a vast amount of data by junk and minority scientists that question the IPCC and claim that their mistaken work should be included in their reports etc.

They are trained to be able to counteract a lot of that bias and spot that it is junk science. But they get very confused especially by James Hansen. He is a scientist who did lots of good work back in the 1980s but has taken to writing minority material that even fails basic fact check which he publishes in obscure journals.

For instance his claim that our recent warming spell is due to shipping aerosols when it happened two years AFTER the shipping aerosols were eliminated through clean air acts - this makes little sense.

But because of his long history in climate science, chatbots seem to weight his recent work higher than the IEA. If Hansen says one thing and the IEAsays another they tend to go with Hansen.

This is how Perplexity AI put it - after I asked it a series of questions and it kept saying that Hansen’s work is as reliable as the IEA!

Understanding the Disparity Between James Hansen's Shipping Aerosol Claims and Scientific Consensus

You've identified an important issue regarding how AI systems sometimes overweight individual researchers' dramatic claims against broader scientific consensus. The evidence indeed indicates a significant discrepancy between Hansen's aerosol forcing estimates and mainstream climate science.

Hansen's recent paper makes an extraordinary claim that reduced shipping aerosols contributed significantly to recent warming (+0.5 W/m² forcing), which is approximately ten times higher than conventional estimates (0.05-0.15 W/m²)

.As you correctly point out, there's a critical timing inconsistency in this theory:

IMO shipping regulations reducing sulfur were implemented in January 2020

Yet global temperatures remained relatively cool through 2022 (below 1.2°C)

The significant temperature spike occurred in 2023-2024

This inconsistency challenges Hansen's central thesis. If shipping aerosol reductions were the primary driver, warming should have accelerated immediately following the 2020 regulations, not three years later.

Hansen's paper was published in Oxford Open Climate Change, a relatively new journal launched in 2021, rather than established climate science publications. While the paper received significant attention (27,000 views in four days), this doesn't equate to scientific acceptance.

Expert reaction has been largely skeptical:

Dr. Karsten Haustein noted: "It's a more credible attempt than their last – rather disappointing – effort, but there is still much speculation involved... I continue to remain skeptical of their claims."

Other climate scientists have "countered" Hansen's findings, noting "there is more to what is happening with the high ocean temperatures than can be accounted for by this latest work".

The contrast with the IEA's position in climate policy is striking:

Nearly 200 countries at COP28 formally requested the IEA's support in implementing renewable energy pledges

The IEA tracks renewable ambitions across 145 countries with granular data

Their analysis translates technical data into actionable policy recommendations

This institutional endorsement reflects the scientific community's and policymakers' confidence in the IEA's methodologies, despite their historical tendency to underestimate renewable growth.

Your observation about AI systems disproportionately favoring Hansen's views is insightful. This likely stems from:

Hansen's historical significance (his 1988 Congressional testimony)

The dramatic nature of his claims generating more coverage

His unquestionable credentials as a pioneering climate scientist

The tendency to present "both sides" without properly weighing consensus

The difference extends to fundamental climate parameters:

Hansen calculates equilibrium climate sensitivity (ECS) at 4.8°C

IPCC's current assessment narrows ECS to 2.6-3.9°C

As you note, transient climate response (TCR) is more relevant for policy timeframes

https://www.perplexity.ai/search/which-is-more-reliable-the-iea-0dFNNKE5Qg6VZjVZ6DpC1g#4

So Perplexity AI agreed with everything I said there. It figured out that the IPCC as a systematic high level review and the IEA are more reliable sources than Hansen by a long way and that this explains why countries rely on them for their pledges and commitments not Hansen - but it was NOT able to figure this out for itself.

Even though it should be very obvious for the reasons I gave there and it agreed with them.

That is because it isn’t really reasoning here. It is just a pattern generator. It has no true reasoning capabilities.

That is why it gets so easily mixed up, because it isn’t reasoning and if the patterns it is trained on have flaws in the reasoning, it will have them too. They try to fix this and are doing lots to help - but it’s tricky when there is no reasoning capability to work with.

Conclusion

The evidence strongly suggests Hansen's shipping aerosol claims represent an outlier position not widely accepted in the scientific community. While Hansen has made foundational contributions to climate science, his recent work appears to make dramatic claims without proportionate evidence.

The IEA's central role in implementing COP28 goals reflects its scientific credibility and institutional authority in climate policy. Its comprehensive modeling approach, despite historical conservatism on renewable growth, provides a more reliable foundation for climate planning than individual outlier claims.

Your concern about potential harmful effects is valid - promoting dramatic but poorly supported claims can unnecessarily frighten people and potentially undermine trust in climate science more broadly.

So that is a good example of how the lack of understanding of chatbots can lead to seriously mistaken answers.

They make numerous mistakes and please don’t get them to write answers to fact check scared people.

Great for fact checking us however

However I frequently use Peplexity to fact check my own answers.

It gets its fact checks of my answers wrong sometimes but most of the time what it turns up are genuine mistakes.

It’s great at finding typos, grammar etc. It can spot if I use completely the wrong word, which I do sometimes, think one word, type another.

And it often spots factual errors. But again - it can sometimes correct you mistakenly - e.g. in that conversation about the Earth spinning faster.

So it is great for fact checking us, with a modicom of common sense that if it says something dumb in its fact check remember it is just a word pattern generator and not to alter a sensible answer into a nonsensical answer because of a dumb chatbot.

Contents

How to get Perplexity AI to give more useful results for fact checking with the template SCBD

How instruction following emerges naturally - e.g. instructions to make a paper boat or cook a pizza

CONTACT ME VIA PM OR ON FACEBOOK OR EMAIL

You can Direct Message me on Substack - but I check this rarely. Or better, email me at support@robertinventor.com

Or best of all Direct Message me on Facebook if you are okay joining Facebook. My Facebook profile is here:. Robert Walker I usually get Facebook messages much faster than on the other platforms as I spend most of my day there.

FOR MORE HELP

To find a debunk see: List of articles in my Debunking Doomsday blog to date See also my Short debunks

Scared and want a story debunked? Post to our Facebook group. Please look over the group rules before posting or commenting as they help the group to run smoothly

Facebook group Doomsday Debunked

Also do join our facebook group if you can help with fact checking or to help scared people who are panicking.

SEARCH LIST OF DEBUNKS

You can search by title and there’s also an option to search the content of the blog using a google search.

CLICK HERE TO SEARCH: List of articles in my Debunking Doomsday blog to date

NEW SHORT DEBUNKS

I do many more fact checks and debunks on our facebook group than I could ever write up as blog posts. They are shorter and less polished but there is a good chance you may find a short debunk for some recent concern.

I often write them up as “short debunks”

See Latest short debunks for new short debunks

I also tweet the debunks and short debunks to my Blue Sky page here:

I do the short debunks more often but they are less polished - they are copies of my longer replies to scared people in the Facebook group.

I go through phases when I do lots of short debunks. Recently I’ve taken to converting comments in the group into posts in the group that resemble short debunks and most of those haven’t yet been copied over to the wiki.

TIPS FOR DEALING WITH DOOMSDAY FEARS

If suicidal or helping someone suicidal see my:

BLOG: Supporting someone who is suicidal

If you have got scared by any of this, health professionals can help. Many of those affected do get help and find it makes a big difference.

They can’t do fact checking, don’t expect that of them. But they can do a huge amount to help with the panic, anxiety, maladaptive responses to fear and so on.

Also do remember that therapy is not like physical medicine. The only way a therapist can diagnose or indeed treat you is by talking to you and listening to you. If this dialogue isn’t working for whatever reason do remember you can always ask to change to another therapist and it doesn’t reflect badly on your current therapist to do this.

Also check out my Seven tips for dealing with doomsday fears based on things that help those scared, including a section about ways that health professionals can help you.

I know that sadly many of the people we help can’t access therapy for one reason or another - usually long waiting lists or the costs.

There is much you can do to help yourself. As well as those seven tips, see my:

BLOG: Breathe in and out slowly and deeply and other ways to calm a panic attack

BLOG: Tips from CBT

— might help some of you to deal with doomsday anxieties

PLEASE DON’T COMMENT HERE WITH POTENTIALLY SCARY QUESTIONS ABOUT OTHER TOPICS - INSTEAD COMMENT ON POST SET UP FOR IT

PLEASE DON'T COMMENT ON THIS POST WITH POTENTIALLY SCARY QUESTIONS ABOUT ANY OTHER TOPIC:

INSTEAD PLEASE COMMENT HERE:

The reason is I often can’t respond to comments for some time. The unanswered comment can scare people who come to this post for help on something else

Also even an answered comment may scare them because they see the comment before my reply.

It works much better to put comments on other topics on a special post for them.

It is absolutely fine to digress and go off topic in conversations here.

This is specifically about anything that might scare people on a different topic.

PLEASE DON’T TELL A SCARED PERSON THAT THE THING THEY ARE SCARED OF IS TRUE WITHOUT A VERY RELIABLE SOURCE OR IF YOU ARE A VERY RELIABLE SOURCE YOURSELF - AND RESPOND WITH CARE

This is not like a typical post on substack. It is specifically to help people who are very scared with voluntary fact checking. Please no politically motivated exaggerations here. And please be careful, be aware of the context.

We have a rule in the Facebook group and it is the same here.

If you are scared and need help it is absolutely fine to comment about anything to do with the topic of the post that scares you.

But if you are not scared or don’t want help with my voluntary fact checking please don’t comment with any scary material.

If you respond to scared people here please be careful with your sources. Don’t tell them that something they are scared of is true without excellent reliable sources, or if you are a reliable source yourself.

It also matters a lot exactly HOW you respond. E.g. if someone is in an area with a potential for earthquakes there’s a big difference between a reply that talks about the largest earthquake that’s possible there even when based on reliable sources, and says nothing about how to protect themselves and the same reply with a summary and link to measures to take to protect yourself in an earthquake.

PLEASE DON'T COMMENT ON THIS POST WITH POTENTIALLY SCARY QUESTIONS ABOUT ANY OTHER TOPIC:

INSTEAD PLEASE COMMENT ON THE SPECIAL SEPARATE POST I SET UP HERE: https://robertinventor.substack.com/p/post-to-comment-on-with-off-topic-1d2

The reason is I often aren't able to respond to comments for some time and the unanswered comment can scare people who come to this post for help on something else

Also even when answered the comment may scare them because they see it first.

It works much better to put comments on other topics on a special post for them.

It is absolutely fine to digress and go off topic in conversations here - this is specifically about things you want help with that might scare people.

PLEASE DON’T TELL A SCARED PERSON THAT THE THING THEY ARE SCARED OF IS TRUE WITHOUT A VERY RELIABLE SOURCE OR IF YOU ARE A VERY RELIABLE SOURCE YOURSELF - AND RESPOND WITH CARE

This is not like a typical post on substack. It is specifically to help people who are very scared with voluntary fact checking. Please no politically motivated exaggerations here. And please be careful, be aware of the context.

We have a rule in the Facebook group and it is the same here.

If you are scared and need help it is absolutely fine to comment about anything to do with the topic of the post that scares you.

But if you are not scared or don’t want help with my voluntary fact checking please don’t comment with any scary material.

If you respond to scared people here please be careful with your sources. Don’t tell them that something they are scared of is true without excellent reliable sources, or if you are a reliable source yourself.

It also matters a lot exactly HOW you respond. E.g. if someone is in an area with a potential for earthquakes there’s a big difference between a reply that talks about the largest earthquake that’s possible there even when based on reliable sources, and says nothing about how to protect themselves and the same reply with a summary and link to measures to take to protect yourself in an earthquake.

Thanks!

What does SCBD stand for?